AMA with Pete Lee, CRO of Zingtree: Flexibility, Architecture & AI You Can Trust

Zingtree CRO Pete Lee shares a technical POV on building AI that’s flexible, safe, and built for real enterprise complexity. From architecture to agentic AI, this AMA unpacks what it takes to automate without losing control.

Zingtree CRO Pete Lee shares a clear, technical view of what it takes to successfully implement AI-powered automation in complex, regulated environments. In this AMA, he explains why deterministic AI beats “black box” approaches, how business logic and guardrails are essential for safe deployments, and why flexibility, not generality, is the key to sustainable CX automation.

AMA with Pete Lee

How do you describe what Zingtree does?

Zingtree helps enterprise organizations solve the most complex problems in highly regulated industries using automation and AI, but in a deterministic, logic-driven way.

How has customer complexity evolved over the past few years?

- Customer expectations are higher: People do more research before contacting support, and they expect fast, frictionless service like they get from Amazon.

- Product complexity has increased: Many offerings are more technical, with layered configurations and support requirements.

- Operational environments are harder to manage: With globalization, regulatory pressure, and compliance requirements, the systems that support CX are more fragmented and interdependent.

The result? More edge cases, more risk, and more pressure to get it right the first time.

What’s one common misconception about Zingtree?

That logic-based systems like workflows are obsolete in the age of AI.

The truth is, logic matters more than ever. Generative AI may sound smart, but without clear business rules and contextual inputs, it creates risk: hallucinations, wrong answers, and inconsistent outputs.

Zingtree’s logic engine ensures that AI only operates within structured, governed workflows, where the business defines the guardrails.

What makes Zingtree different from other AI vendors?

Zingtree doesn’t offer a vague “AI assistant” or generalized chatbot. We’re solving real, regulated, and high-risk use cases — where compliance, control, and explainability matter.

That means:

- Applying AI at the right moment in a workflow

- Giving business and IT the tools to define guardrails and fallback logic

- Supporting both agent assist and customer-facing flows

- Enabling data retrieval from backend systems in real time

- Delivering structured automation, not just surface-level interaction

We’re logic-first, not language-first, and that distinction is critical in enterprise environments.

How does Zingtree ensure AI is safe for high-stakes environments?

Through process-driven AI governance.

If you don’t define guardrails, both humans and AI will go off the rails. We align business and IT stakeholders to create a shared logic layer, then allow automation and AI to operate within those boundaries.

This reduces:

- Variance in how cases are handled

- The risk of hallucinated or non-compliant responses

- Over-reliance on opaque LLM behavior

We’re bringing process integrity to AI deployments, which is especially important for healthcare, insurance, and financial services.

What would you say to a buyer considering building in-house?

Most IT teams want governance, and they’re right to want it. But the business also needs speed to value.

Zingtree offers a hybrid model:

- IT can own and maintain logic structures

- Business teams can build and adapt flows using no-code tools

- Everyone stays aligned, compliant, and fast

You don’t have to choose between control and velocity. With Zingtree, you get both.

How does Zingtree support flexibility across systems and use cases?

AI isn’t one-size-fits-all.

You need to meet your user — whether it’s an agent, consumer, or field rep — in the right channel, at the right time, with the right data.

Zingtree supports:

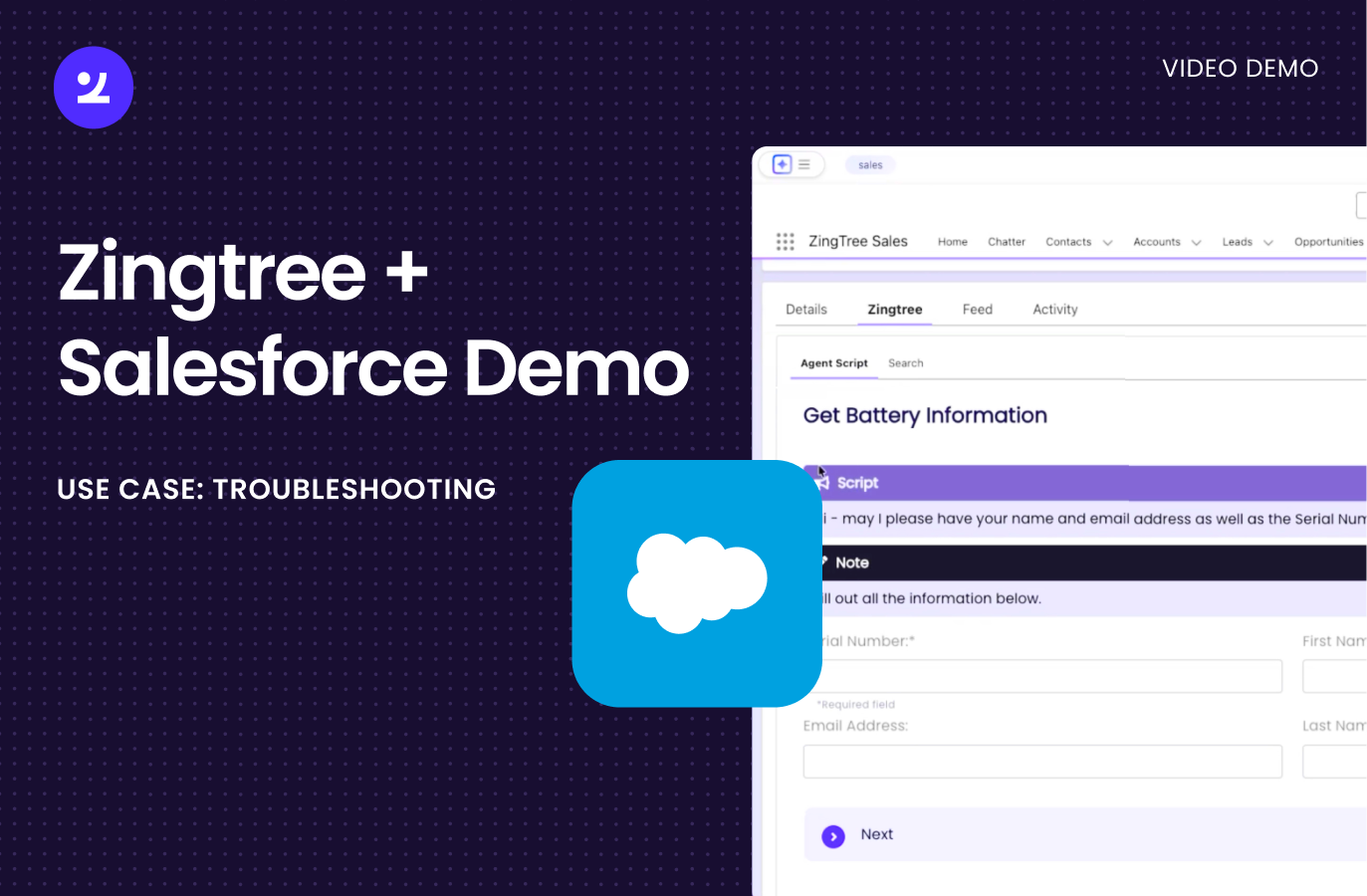

- Digital, voice, and agent-assisted workflows

- Multiple LLMs and prompt libraries

- External APIs to pull real-time data

- Structured flows that blend AI, human input, and business rules

This is how AI orchestration actually works: integrating logic, content, and system data to deliver outcomes that are accurate, compliant, and fast.

How does Zingtree give customers control over AI output?

By keeping the logic layer separate from the model.

Zingtree lets customers:

- Control when AI is triggered

- Set thresholds based on confidence scores

- Apply fallback logic if AI responses don’t meet criteria

- Use their own LLM license, prompts, and data boundaries

- Keep the AI walled in, ensuring it doesn’t hallucinate or access external context

It’s deterministic AI: automation that operates predictably, explainably, and within business-defined rules.
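To make the idea of a logic layer that sits outside the model more concrete, here is a minimal sketch of a confidence gate with fallback. It is an illustration only, not Zingtree's implementation: `AIResult`, `StepOutcome`, `run_ai_step`, and the `call_model` callable are hypothetical names, and the sketch assumes the model returns a confidence score between 0 and 1.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AIResult:
    """Hypothetical model response: text plus a confidence score in [0, 1]."""
    text: str
    confidence: float


@dataclass(frozen=True)
class StepOutcome:
    """What the workflow step returns, and where the text came from."""
    text: str
    source: str  # "ai" or "fallback"


def run_ai_step(
    ai_enabled: bool,
    call_model: Callable[[str], AIResult],
    min_confidence: float,
    fallback_text: str,
    prompt: str,
) -> StepOutcome:
    """Decide, outside the model, whether an AI answer may be used."""
    # The business controls whether AI is triggered at this step at all.
    if not ai_enabled:
        return StepOutcome(fallback_text, "fallback")

    result = call_model(prompt)

    # Answers below the business-defined threshold are replaced by fallback logic.
    if result.confidence < min_confidence:
        return StepOutcome(fallback_text, "fallback")

    return StepOutcome(result.text, "ai")
```

Because the gate is plain code rather than model behavior, the same inputs always produce the same routing decision, and the threshold can be changed without touching the model or its prompts.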

What’s on the roadmap for Zingtree?

We’re moving toward agentic automation, where AI components can act semi-independently, but still within a governed workflow.

That means:

- Structured, rules-based orchestration

- Seamless handoffs between customers, agents, and AI

- Real-time context switching based on intent and data

- AI that can act, escalate, or adapt — but never guess

The goal is to build an AI orchestration layer that doesn’t just support automation; it defines how automation happens, under full business control.
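One way to picture "act, escalate, or adapt, but never guess" is a rules table that an AI component must consult before it does anything. The sketch below is a simplified assumption, not Zingtree's roadmap design: the `Rule` type, the `RULES` table, the intent names, and the `decide` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical business rule: which action an intent maps to, and what data it needs."""
    action: str
    required_fields: tuple[str, ...]


# Illustrative rules table; in practice the business would define and own this.
RULES = {
    "check_claim_status": Rule("lookup_claim", ("claim_id",)),
    "update_address": Rule("update_address_record", ("customer_id", "new_address")),
}


def decide(intent: str, context: dict) -> tuple[str, str]:
    """Return ("act", action) only when a rule exists and its data is present."""
    rule = RULES.get(intent)
    if rule is None:
        # No rule covers this intent, so hand off instead of guessing.
        return ("escalate", "no rule for intent")

    missing = [field for field in rule.required_fields if field not in context]
    if missing:
        # Required context is incomplete, so hand off instead of guessing.
        return ("escalate", "missing: " + ", ".join(missing))

    return ("act", rule.action)
```

For example, `decide("update_address", {"customer_id": "1"})` escalates because `new_address` is missing, while an intent that is not in the table escalates no matter what context is supplied.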
